Understanding 360 Style Transfers

I designed and implemented a new approach for applying style transfers to 360 pictures and video. Using contemporary and historical paintings and images from Google Street View, I created art that encourages viewers to appreciate what is beautiful or poignant about the modern world, and to see it with new eyes.

To understand what this is about, let's first review what a style transfer is and how it works.

A style transfer is a computational technique that uses a neural network to re-imagine a photograph in the artistic style of a painting. Put more simply, if you give it a photograph and a painting, it will output an image with the same content as the photograph but in the style of the painting.
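In the Gatys, Ecker, and Bethge formulation, "content" and "style" are two different statistics of the feature maps a pretrained convolutional network (VGG in the 2015 paper) produces for an image. Content is compared position by position. Style is compared through Gram matrices, which record which features occur together but not where. The sketch below uses NumPy with random arrays standing in for real network activations. The function names are illustrative, and a real implementation would also run an optimizer over the output image's pixels.

```python
import numpy as np

def gram_matrix(features: np.ndarray) -> np.ndarray:
    """Channel-by-channel inner products of a (channels, height, width) feature map.

    Summing over every spatial position records which features occur together
    (texture, brushwork) while discarding where they occur (layout).
    """
    channels = features.shape[0]
    flat = features.reshape(channels, -1)  # one row per channel
    return flat @ flat.T

def content_loss(generated: np.ndarray, content: np.ndarray) -> float:
    """Half the squared error between feature maps: penalizes moving things around."""
    return 0.5 * float(np.sum((generated - content) ** 2))

def style_loss(generated: np.ndarray, style: np.ndarray) -> float:
    """Squared error between Gram matrices with the 1 / (4 N^2 M^2) normalization,
    where N is the number of channels and M the number of spatial positions."""
    n = generated.shape[0]
    m = generated.shape[1] * generated.shape[2]
    diff = gram_matrix(generated) - gram_matrix(style)
    return float(np.sum(diff ** 2)) / (4.0 * n ** 2 * m ** 2)

# Random arrays stand in for real CNN activations.
rng = np.random.default_rng(0)
feats = rng.standard_normal((8, 16, 16))
# Shuffle the spatial positions: same feature statistics, scrambled layout.
perm = rng.permutation(16 * 16)
shuffled = feats.reshape(8, -1)[:, perm].reshape(8, 16, 16)
print(style_loss(shuffled, feats))        # essentially 0 (floating-point noise)
print(content_loss(shuffled, feats) > 0)  # True
```

Scrambling the layout leaves the style loss at zero while the content loss jumps. This is why minimizing both at once yields an image that keeps the photograph's arrangement but takes on the painting's textures.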

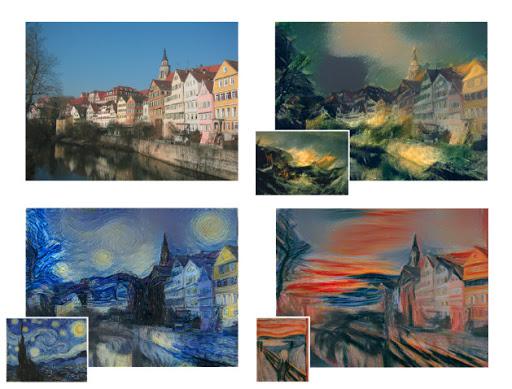

From A Neural Algorithm of Artistic Style (2015) by Leon A. Gatys, Alexander S. Ecker, Matthias Bethge

Compare the photograph of a row of houses in the upper left corner with the other three images. All three still clearly show the same row of houses, but they mimic the artistic styles of famous paintings by Turner, Van Gogh, and Munch.

The example above comes from the seminal paper on the subject, A Neural Algorithm of Artistic Style, by Gatys, Ecker, and Bethge, published in 2015. Since then, many more research papers have iterated on the idea of using a neural network to create images that imitate a painting's artistic style. I've always been fascinated with style transfers, and my interest in the topic contributed to my decision to attend ITP.

For one class at school, I obtained images from the Google Street View API and applied a style transfer algorithm to them to create what look like animated paintings. I wanted to create 360 style transfers for that class, but at the time it was beyond my reach.

Later, I was asked to use style transfers to create illustrations for an Adjacent article. For this project I developed a new approach I call cylindrical style transfers. A cylindrical style transfer is just like a regular style transfer, except that the right and left sides of the image join together seamlessly.

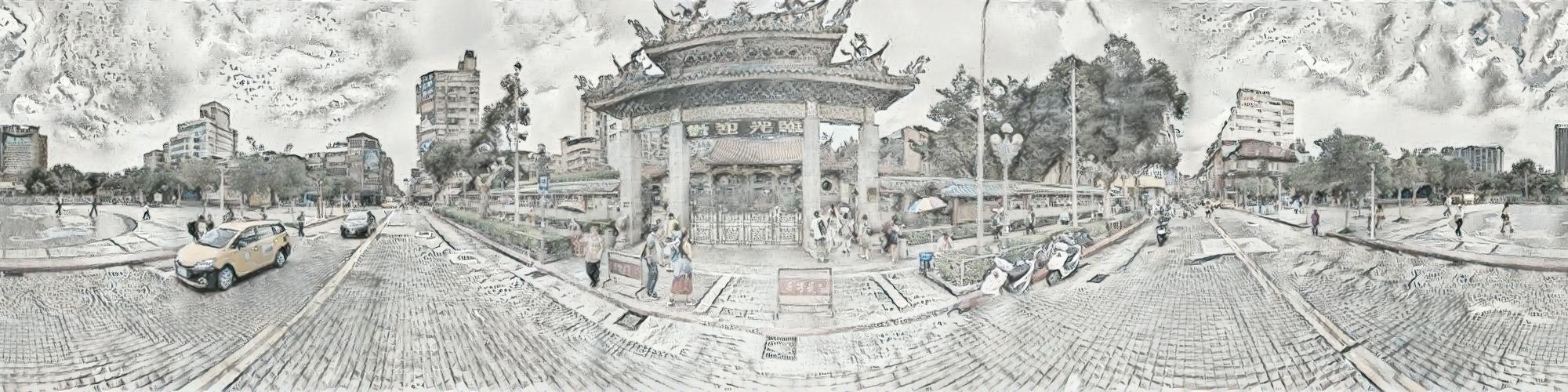

Content Image: Taipei, Taiwan near the Lungshan Temple and Bangka Park. Style Image: Map of "Art and China after 1989: Theater of the World" (2017), by Qiu Zhijie.

This illustrates an important challenge with style transfers. The output of the style transfer algorithm is somewhat random, and the algorithm has no way of knowing that the right and left sides of the image are supposed to join together. Nothing guarantees that the styling of the sidewalk and sky on the left side of the image above will match the styling of the sidewalk and sky on the right side. Any difference, even a small one, causes a noticeable and unpleasant seam. I came up with an approach that solves that problem.
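The post doesn't spell out how cylindrical style transfers work, so the following is not the author's method. It illustrates one generic technique for making an image operation seam-aware: circular ("wrap") padding, where the left neighbor of the first column is taken from the last column. Inside a neural network, every convolution layer would need padding like this. The single box blur below (a hypothetical `box_blur_wrap`, odd kernel size assumed) stands in for one such convolution.

```python
import numpy as np

def box_blur_wrap(img: np.ndarray, k: int = 3) -> np.ndarray:
    """k x k box blur (k odd) of an (H, W) image treated as a cylinder.

    Columns wrap (the left neighbor of column 0 is column W - 1), so the
    left and right edges are processed as if they touched. Rows are padded
    by repeating the edge row, because the top and bottom do not wrap.
    """
    r = k // 2
    padded = np.pad(img, ((r, r), (0, 0)), mode="edge")    # no vertical wrap
    padded = np.pad(padded, ((0, 0), (r, r)), mode="wrap")  # horizontal wrap
    h, w = img.shape
    out = np.zeros((h, w), dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)

rng = np.random.default_rng(1)
img = rng.random((32, 64))
# Rotating the panorama before filtering gives the same result as rotating
# after filtering: no column is treated as a special "edge".
print(np.allclose(box_blur_wrap(np.roll(img, 20, axis=1)),
                  np.roll(box_blur_wrap(img), 20, axis=1)))  # True
```

With ordinary zero padding the same check generally fails, because the first and last columns are filtered against empty space instead of against each other. That mismatch is the kind of seam described above.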

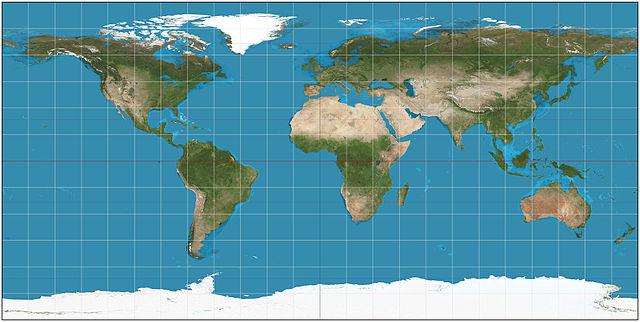

360 imagery uses something called an equirectangular projection to represent the image data. Ideally, a 360 image would be represented with some kind of spherical arrangement of pixels. Unfortunately, standard image formats, and the neural network the style transfer algorithm is based on, work with rectangular grids of pixels. An equirectangular projection maps longitude directly to the horizontal axis and latitude directly to the vertical axis, which makes it an appropriate rectangular pixel arrangement that is also computationally easy to work with.
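Because each row of an equirectangular image is a line of constant latitude and each column a line of constant longitude, converting between pixels and spherical coordinates takes only two linear formulas. The sketch below assumes one common convention: longitude 0 at the horizontal center, y increasing downward, and integer coordinates at pixel centers. Other tools place the origin differently, and the function names are illustrative.

```python
import numpy as np

def pixel_to_lonlat(x, y, width, height):
    """Pixel coordinates (x right, y down) to (longitude, latitude) in radians.

    Longitude grows linearly from -pi at the left edge to +pi at the right
    edge; latitude falls linearly from +pi/2 at the top to -pi/2 at the bottom.
    Integer (x, y) refer to pixel centers.
    """
    lon = (np.asarray(x, dtype=float) + 0.5) / width * 2.0 * np.pi - np.pi
    lat = np.pi / 2.0 - (np.asarray(y, dtype=float) + 0.5) / height * np.pi
    return lon, lat

def lonlat_to_pixel(lon, lat, width, height):
    """Inverse of pixel_to_lonlat; returns fractional pixel coordinates."""
    x = (np.asarray(lon, dtype=float) + np.pi) / (2.0 * np.pi) * width - 0.5
    y = (np.pi / 2.0 - np.asarray(lat, dtype=float)) / np.pi * height - 0.5
    return x, y

# A 2:1 image covers 360 degrees across and 180 degrees top to bottom.
lon, lat = pixel_to_lonlat(1023.5, 511.5, 2048, 1024)
print(np.degrees(lon), np.degrees(lat))  # 0.0 0.0 (the center of the image)
```

The 2:1 aspect ratio of typical 360 images follows directly from these formulas: the horizontal axis spans twice as many degrees as the vertical one.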

The most familiar example of an equirectangular projection, one you have surely seen before, is this world map. Observe that continents farther away from the equator appear stretched out horizontally.
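The stretching has a simple closed form. A circle of latitude gets shorter toward the poles, in proportion to the cosine of the latitude, yet every row of the image has the same width. So each row is stretched horizontally by 1 / cos(latitude), while the vertical scale stays the same everywhere. A small illustration (the function name is hypothetical):

```python
import math

def horizontal_stretch(lat_deg: float) -> float:
    """How much wider something appears in an equirectangular image at this
    latitude than the same thing would at the equator.

    A circle of latitude has a circumference proportional to cos(latitude),
    yet every image row has the same width, so each row is stretched
    horizontally by 1 / cos(latitude). Vertical scale is the same everywhere.
    """
    return 1.0 / math.cos(math.radians(lat_deg))

for lat in (0, 30, 60, 80):
    print(f"{lat:>2} deg: {horizontal_stretch(lat):.2f}x")
# prints:
#  0 deg: 1.00x
# 30 deg: 1.15x
# 60 deg: 2.00x
# 80 deg: 5.76x
```

At 60 degrees of latitude everything is already twice as wide as it should be, and the factor grows without bound toward the poles.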

By Strebe - Own work, CC BY-SA 3.0

Equirectangular images amplify the challenge posed by cylindrical images. Not only must the right and left sides of the image join together seamlessly, but the entire top edge and the entire bottom edge must each converge to a single point, just as every location along the top edge of the map above corresponds to the North Pole and every location along the bottom edge to the South Pole. In addition, the pixels near the top and bottom of the image are stretched, so the style transfer algorithm needs to be applied in a way that accounts for that distortion.
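Both problems become visible once each pixel is mapped to a point on the unit sphere. The sketch below assumes pixel-center sampling and a z-up coordinate system, and its function names are illustrative. It shows that the whole top row of an equirectangular image lands right next to the north pole. It also estimates how much of the sphere each pixel actually covers.

```python
import numpy as np

def equirect_directions(width: int, height: int) -> np.ndarray:
    """Unit 3D direction (x, y, z), z up, for every pixel center.

    Returns an array of shape (height, width, 3).
    """
    lon = (np.arange(width) + 0.5) / width * 2.0 * np.pi - np.pi
    lat = np.pi / 2.0 - (np.arange(height) + 0.5) / height * np.pi
    lon, lat = np.meshgrid(lon, lat)  # each has shape (height, width)
    return np.stack([np.cos(lat) * np.cos(lon),
                     np.cos(lat) * np.sin(lon),
                     np.sin(lat)], axis=-1)

def pixel_solid_angles(width: int, height: int) -> np.ndarray:
    """Approximate area of each pixel on the unit sphere, in steradians.

    A pixel spans 2*pi/width radians of longitude and pi/height of latitude,
    so it covers about cos(lat) * (2*pi/width) * (pi/height): rows near the
    poles cover much less of the sphere than rows near the equator.
    """
    lat = np.pi / 2.0 - (np.arange(height) + 0.5) / height * np.pi
    row = np.cos(lat) * (2.0 * np.pi / width) * (np.pi / height)
    return np.repeat(row[:, None], width, axis=1)

w, h = 512, 256
dirs = equirect_directions(w, h)
# Every pixel in the top row points almost straight up (within about 0.006).
print(np.linalg.norm(dirs[0] - np.array([0.0, 0.0, 1.0]), axis=1).max())
# The per-pixel areas add up to the whole sphere, 4*pi (about 12.566).
print(pixel_solid_angles(w, h).sum(), 4 * np.pi)
```

All 512 pixels of the top row crowd into a tiny cap around the pole. Each of them covers only a sliver of the area of a pixel at the equator, yet a network that sees only the flat image gives them equal weight.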

One approach to applying style transfers to 360 imagery is to ignore these challenges and let the algorithm do the best it can with the equirectangular projection as is. This can work, but the result often has unpleasant artifacts. Consider the example below, a single 360 image that you can rotate with your mouse. If you turn 180 degrees to the right or left, you will see a strange vertical line. If you follow that line up or down, you will notice that the style looks as if it is being sucked into a black hole. These artifacts result directly from failing to address the seam and distortion challenges, and they ruin the appeal of the result.

In 2018, Ruder, Dosovitskiy, and Brox published the paper Artistic style transfer for videos and spherical images. The researchers addressed the challenges of applying style transfers to 360 imagery by using a cubic projection to remap the equirectangular projection onto six cube faces. The style transfer algorithm is applied to each face of the cube separately, and the six styled faces are then joined together in a way that blends the seams and creates a new equirectangular projection. This approach eliminates the distortion problems at the nadir and zenith (the points directly below and above the viewer) and reduces the appearance of seams. Although it achieves improved results, it still has visible artifacts caused by the cubic projection. If you watch their demo video on YouTube, you might be able to make out the orientation of the cube and the locations of its corners.
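The heart of any cubic projection is deciding which face a viewing direction hits. The sketch below shows the standard largest-component rule with a hypothetical `cube_face` helper. It does not reproduce the face layout, orientation, or seam blending that Ruder, Dosovitskiy, and Brox used, and it skips the per-face flipping of u and v that real cube-map formats apply.

```python
def cube_face(direction):
    """Return the cube-map face a 3D direction hits and its (u, v) in [-1, 1].

    The face is the axis with the largest absolute component; dividing the
    other two components by it gives the position on that face. Assumes a
    nonzero direction. Real cube-map formats also flip or swap u and v per
    face; that bookkeeping is omitted here.
    """
    x, y, z = (float(c) for c in direction)
    ax, ay, az = abs(x), abs(y), abs(z)
    if ax >= ay and ax >= az:
        return ("+x" if x > 0 else "-x"), y / ax, z / ax
    if ay >= az:
        return ("+y" if y > 0 else "-y"), x / ay, z / ay
    return ("+z" if z > 0 else "-z"), x / az, y / az

print(cube_face((1.0, 0.0, 0.0)))  # ('+x', 0.0, 0.0): center of a face
print(cube_face((1.0, 1.0, 1.0)))  # ('+x', 1.0, 1.0): a corner of the cube
```

Directions whose components tie in magnitude, such as (1, 1, 1), fall on the cube's edges and corners. There, faces that were styled independently have to meet, which is consistent with the corners remaining visible in the results.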

This research paper intrigued me when I read it, because I had recently added efficient 360 rendering functionality to my Processing library, Camera3D. I immediately started thinking about alternative approaches, and those ideas stayed with me and grew. My thesis project is the direct result of my attempt to find a better algorithm for 360 style transfers.

My 360 style transfer algorithm, which is still a prototype, applies the style transfer algorithm developed by Gatys, Ecker, and Bethge to 360 imagery in a way that respects the distortion of the equirectangular projection and (when used properly) is completely seamless. Central to my approach is a new spherical data structure I call a TensorSphere. This data structure manipulates pixel data using spherical coordinates, which allows me to apply the style transfer algorithm to the entire image at once, addressing all of the seam and distortion challenges while also being more computationally efficient than the approaches described above.
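The TensorSphere is not described here in enough detail to reproduce, so the sketch below is not the author's method. It is a hypothetical illustration of one thing "respecting the distortion of the equirectangular projection" could mean for the Gatys et al. style statistics. Each pixel's contribution to the Gram matrix is weighted by its area on the sphere, proportional to cos(latitude), so the stretched rows near the poles are not over-counted. The helpers `latitude_weights` and `weighted_gram` are invented for this example.

```python
import numpy as np

def latitude_weights(width: int, height: int) -> np.ndarray:
    """cos(latitude) at each pixel center, proportional to the pixel's area
    on the sphere (hypothetical helper for this illustration)."""
    lat = np.pi / 2.0 - (np.arange(height) + 0.5) / height * np.pi
    return np.repeat(np.cos(lat)[:, None], width, axis=1)

def weighted_gram(features: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """Gram matrix in which each spatial position counts in proportion to its weight.

    features: (channels, height, width); weights: (height, width).
    Weights are normalized to sum to 1, so uniform weights give the ordinary
    Gram matrix divided by the number of positions.
    """
    channels = features.shape[0]
    flat = features.reshape(channels, -1)
    norm_w = weights.reshape(-1) / weights.sum()
    return (flat * norm_w) @ flat.T

c, h, w = 4, 32, 64
polar = np.zeros((c, h, w))
polar[0, 0, :] = 1.0          # a feature present only in the top row
equator = np.zeros((c, h, w))
equator[0, h // 2, :] = 1.0   # the same feature, but at the equator
weights = latitude_weights(w, h)
# The stretched polar row counts for far less than the equatorial one.
print(weighted_gram(polar, weights)[0, 0] < weighted_gram(equator, weights)[0, 0])  # True
```

Without the weights, both rows would contribute equally to the style statistics even though the polar row covers a tiny fraction of the sphere.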

Examples of my style transfer algorithm, displayed with A-Frame, are available on this site's homepage. You can also compare the image below with the similar one shown earlier.

In the near future, I intend to publish a paper detailing my algorithm and to share my creation with the artist community. I will provide a user-friendly, accessible, and well-documented tool that artists can use to create their own 360 style transfers. Along the way, I will explore the artistic potential of this new tool while creating meaningful art.